How can I write a Python script to extract specific data from a large JSON file exported from Freshdesk (containing customer conversations, agent info, dates, etc.), and write it to an Excel file in chronological order, considering the file’s potential large size?

For handling and processing a large JSON file efficiently, here are some practical tips:

- Use a Streaming Parser: Libraries like `ijson` can help you parse large JSON files incrementally. This avoids loading the entire file into memory, which is crucial for handling big datasets.

- Extract Relevant Data: As you parse the JSON, extract only the fields you need, such as conversation details, agent names, dates, and ticket IDs. This reduces the amount of data you need to process.

- Sort Data: Collect the extracted records in a list or a pandas DataFrame, then sort them by date. Convert the date strings to datetime values first, so the order is chronological rather than alphabetical.

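- Write to Excel: Use the `pandas` library to write your sorted data to an Excel file. `DataFrame.to_excel` handles the export, as long as an Excel engine such as `openpyxl` is installed.

- Memory Management: If even the extracted data is too large to hold at once, consider processing the file in chunks and writing results incrementally. Keep in mind that a chronological sort needs either all extracted rows at once or a merge of separately sorted chunks, and that a single Excel worksheet holds at most 1,048,576 rows.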
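To see why the dates should be parsed before sorting, here is a small stdlib-only sketch. It assumes the timestamps are ISO 8601 strings with UTC offsets (check what your export actually contains); the rows are made up for illustration, and `parse_timestamp` and `sort_chronologically` are hypothetical helper names.

```python
from datetime import datetime


def parse_timestamp(raw: str) -> datetime:
    """Parse an ISO 8601 timestamp into a timezone-aware datetime.

    A trailing 'Z' is rewritten as '+00:00' because
    datetime.fromisoformat only accepts 'Z' on Python 3.11+.
    """
    if raw.endswith("Z"):
        raw = raw[:-1] + "+00:00"
    return datetime.fromisoformat(raw)


def sort_chronologically(rows: list, key: str = "date") -> list:
    """Return the rows ordered by the parsed timestamp under `key`."""
    return sorted(rows, key=lambda row: parse_timestamp(row[key]))


# Hypothetical rows whose timestamps use different UTC offsets
rows = [
    {"ticket_id": 1, "date": "2024-03-01T09:30:00+05:30"},  # 04:00 UTC
    {"ticket_id": 2, "date": "2024-03-01T05:00:00Z"},       # 05:00 UTC
    {"ticket_id": 3, "date": "2024-03-01T08:00:00+05:30"},  # 02:30 UTC
]

# Plain string sorting compares characters, not points in time
print([r["ticket_id"] for r in sorted(rows, key=lambda r: r["date"])])  # [2, 3, 1]

# Sorting on parsed datetimes gives the true chronological order
print([r["ticket_id"] for r in sort_chronologically(rows)])  # [3, 1, 2]
```

If every timestamp in your file uses the same format and offset, plain string sorting happens to work, but parsing costs little and removes that assumption.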

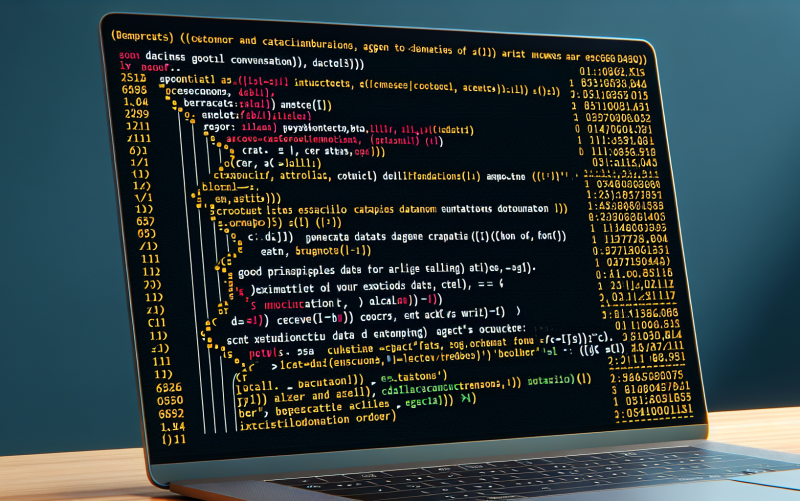

Here’s a rough outline of how you might approach this:

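```python
import ijson
import pandas as pd

# Collect only the fields you need instead of the whole file
data = []

# ijson works on bytes, so open the file in binary mode
with open('large_file.json', 'rb') as file:
    # ijson.items() yields one fully built object at a time.
    # 'item' assumes the file is a top-level JSON array of tickets;
    # change this prefix to match your JSON structure.
    for ticket in ijson.items(file, 'item'):
        # Placeholder keys: adjust them to match your JSON structure
        data.append({
            'date': ticket.get('date'),
            'agent': ticket.get('agent'),
            'conversation': ticket.get('conversation'),
            'ticket_id': ticket.get('ticket_id'),
        })

# Create a DataFrame
df = pd.DataFrame(data)

# Parse the dates so the sort is chronological rather than alphabetical;
# unparseable values become NaT and are sorted to the end
df['date'] = pd.to_datetime(df['date'], errors='coerce', utc=True)

# Sort by date
df.sort_values(by='date', inplace=True)

# Excel cannot store timezone-aware datetimes, so drop the (UTC) timezone
df['date'] = df['date'].dt.tz_localize(None)

# Write to Excel (needs an engine such as openpyxl installed)
df.to_excel('output.xlsx', index=False)
```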
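The outline writes one row per extracted record. If each ticket in your export nests a list of conversation messages, you will probably want one row per message instead, sorted by the message timestamp. The sketch below assumes that layout; the keys `id`, `agent_name`, `conversations`, `created_at` and `body_text`, and the helper `flatten_tickets`, are hypothetical, so rename them to match your file. A small `json.loads` sample stands in for the streamed tickets.

```python
import json
from datetime import datetime
from typing import Iterable, Iterator


def parse_timestamp(raw: str) -> datetime:
    # datetime.fromisoformat only accepts a trailing 'Z' on Python 3.11+
    if raw.endswith("Z"):
        raw = raw[:-1] + "+00:00"
    return datetime.fromisoformat(raw)


def flatten_tickets(tickets: Iterable[dict]) -> Iterator[dict]:
    """Yield one row per conversation message.

    All key names here are hypothetical placeholders for your export's fields.
    """
    for ticket in tickets:
        for message in ticket.get("conversations", []):
            yield {
                "ticket_id": ticket.get("id"),
                "agent": ticket.get("agent_name"),
                "date": message.get("created_at"),
                "message": message.get("body_text"),
            }


# Tiny stand-in for the export; in the real script `tickets` would be
# ijson.items(file, 'item') so tickets are read one at a time
sample = json.loads("""
[
  {"id": 7, "agent_name": "Ana", "conversations": [
      {"created_at": "2024-03-02T10:00:00Z", "body_text": "Follow-up"},
      {"created_at": "2024-03-01T09:00:00Z", "body_text": "First reply"}]},
  {"id": 8, "agent_name": "Ben", "conversations": [
      {"created_at": "2024-03-01T12:00:00Z", "body_text": "Hello"}]}
]
""")

rows = sorted(flatten_tickets(sample), key=lambda row: parse_timestamp(row["date"]))
for row in rows:
    print(row["date"], row["ticket_id"], row["message"])
# 2024-03-01T09:00:00Z 7 First reply
# 2024-03-01T12:00:00Z 8 Hello
# 2024-03-02T10:00:00Z 7 Follow-up
```

Because `flatten_tickets` is a generator, feeding it from `ijson.items` keeps only one ticket in memory at a time, and the sorted `rows` list can go straight into `pd.DataFrame(rows)`. A message without a `created_at` value would make `parse_timestamp` fail, so filter or default those first.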

Because only the selected fields are kept in memory while the file is streamed, this approach copes with large exports and produces a chronologically sorted spreadsheet that is easy to work with.
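For exports big enough to run into Excel's limits, the chunking idea from the memory-management tip can be sketched as splitting the already sorted rows into sheet-sized pieces. An `.xlsx` worksheet holds at most 1,048,576 rows, one of which the header uses; the helper `sheet_chunks` and the constant `EXCEL_MAX_DATA_ROWS` are hypothetical names.

```python
from itertools import islice
from typing import Iterable, Iterator

# An .xlsx worksheet holds at most 1,048,576 rows; keep one for the header
EXCEL_MAX_DATA_ROWS = 1_048_576 - 1


def sheet_chunks(rows: Iterable[dict], size: int = EXCEL_MAX_DATA_ROWS) -> Iterator[list]:
    """Split a stream of already sorted rows into lists of at most `size` rows."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


# Small demonstration with a tiny chunk size
demo_rows = ({"n": i} for i in range(5))
print([len(chunk) for chunk in sheet_chunks(demo_rows, size=2)])  # [2, 2, 1]
```

With pandas, each chunk could then be written to its own sheet inside a `with pd.ExcelWriter('output.xlsx') as writer:` block, using `pd.DataFrame(chunk).to_excel(writer, sheet_name=f'Part {n}', index=False)`. Sort before chunking so the chronological order carries across the sheets.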